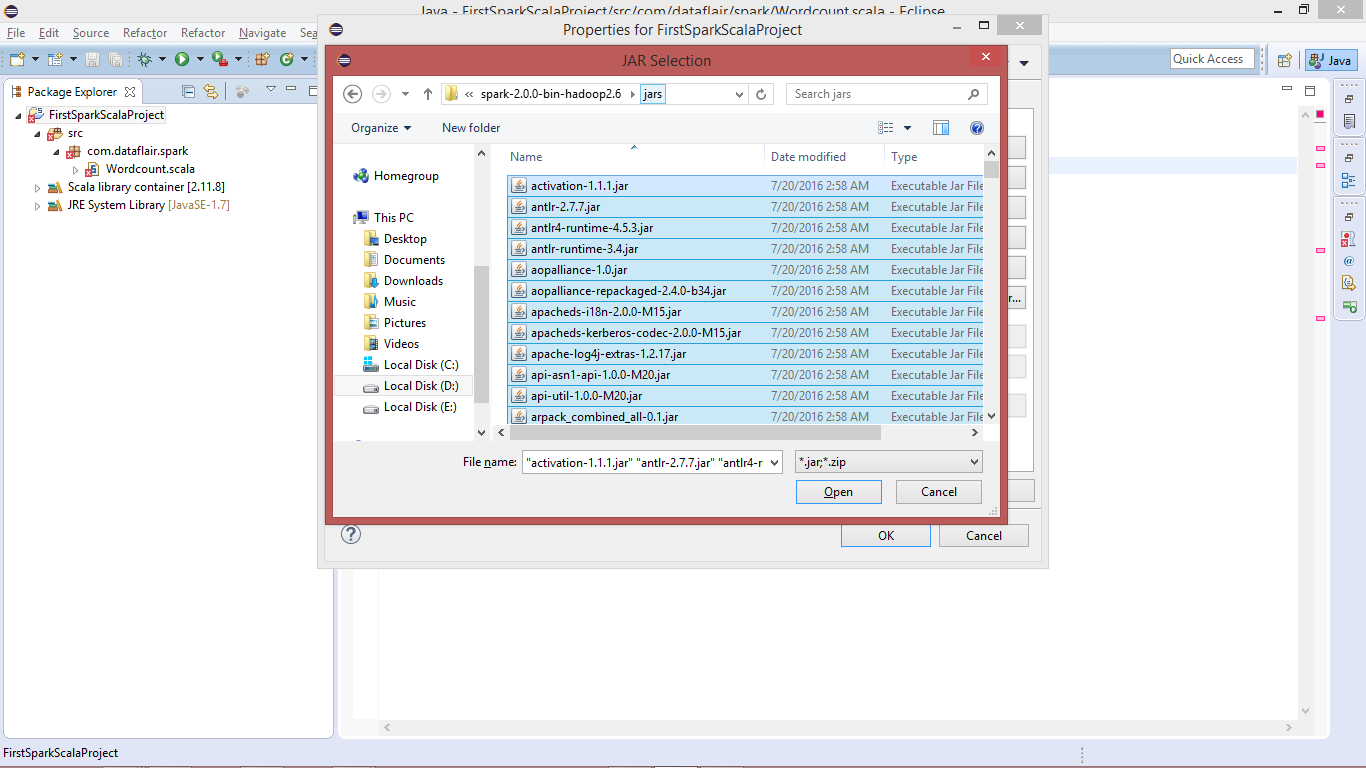

This tutorial will teach you how to set up a full development environment for developing and debugging Spark applications. For this tutorial we'll be using Scala, but Spark also supports development with Java and Python. We will be using IntelliJ Version 2018.2 as our IDE, running on macOS High Sierra, and since we're using Scala we'll use SBT as our build manager. By the end of the tutorial, you'll know how to set up IntelliJ, how to use SBT to manage dependencies, how to package and deploy your Spark application, and how to connect your live program to a debugger.

Before starting, you should have:

- Downloaded and deployed the Hortonworks Data Platform (HDP) Sandbox.
- Installed Scala version 2.11.8 or higher.
- Installed Apache Spark version 2.x or higher.

To install the IntelliJ Scala plugin, navigate to Preferences > Plugins > Browse Repositories, then search for and install Scala. Your screen should look something like this:

Finally, restart IntelliJ so the plugin takes effect.

Now that we have IntelliJ, Scala and SBT installed, we're ready to start building a Spark program. To create a new project, start IntelliJ and select Create New Project:

Next, select Scala with sbt and click Next. Now name your project HelloScala and select the appropriate sbt and Scala versions.

IntelliJ should create a new project with a default directory structure. It may take a minute or two to generate all the folders needed. In the end, your folder structure should look something like this:

- `.idea`: IntelliJ configuration files.
- `project`: sbt build files. For example, `build.properties` allows you to change the SBT version used when compiling your project.
- `src`: Most of your code should go into the `main` directory. The `test` folder should be reserved for test scripts.
- `target`: When you compile your project, the output goes here.
- `build.sbt`: The SBT configuration file. We'll show you how to use this file to import third-party libraries and documentation.

Before we start writing a Spark application, we'll want to import the Spark libraries and documentation into IntelliJ.
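As a rough sketch of what that import could look like, the `build.sbt` below declares Spark as a dependency of the HelloScala project. The version numbers are assumptions: Scala 2.11.8 matches the prerequisite above, and Spark 2.3.0 is just one example of a 2.x release built for Scala 2.11, so set both to match the Spark installed on your sandbox or cluster. The name `sparkVersion` is our own hypothetical helper value, not an sbt setting. The `withSources()` and `withJavadoc()` calls ask sbt to also fetch the source and Javadoc jars, which is one way to make the Spark documentation available in the IDE.

```scala
// build.sbt for the HelloScala project (a minimal sketch, not a definitive setup).
// Assumed versions: adjust scalaVersion and sparkVersion to match your environment.
name := "HelloScala"

version := "0.1"

scalaVersion := "2.11.8"

// Hypothetical helper value so the Spark version is declared in one place.
val sparkVersion = "2.3.0"

// %% appends the Scala binary version to the artifact name,
// so these resolve to spark-core_2.11 and spark-sql_2.11.
// withSources()/withJavadoc() also request the source and Javadoc jars.
libraryDependencies ++= Seq(
  ("org.apache.spark" %% "spark-core" % sparkVersion).withSources().withJavadoc(),
  ("org.apache.spark" %% "spark-sql" % sparkVersion).withSources().withJavadoc()
)
```

After saving the file, re-import the sbt project in IntelliJ (or run `sbt update` from the project directory) so the jars are downloaded.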
